Introduction

Recurrent Neural Networks (RNN) by design are intrinsically suited for analyzing sequences of data, such as genomes, time series, text, or even handwriting. In industry, they are found processing sequential data from stock markets or sensors, while in the digital world, they power applications like language modeling, language translation, text generation, speech recognition, video tagging, and image description generation.

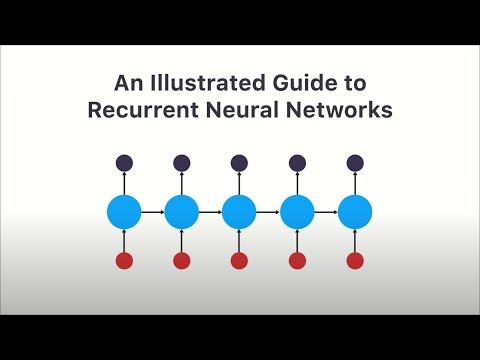

One defining feature of RNNs is their internal memory, supported by recurrent units that capture information from previous time steps, effectively incorporating past input into current and future computations. Unlike feedforward networks, recurrent networks leverage information from previous inputs, making them particularly suitable for processing sequential data.

Single input, single output, or multiple outputs, an RNN efficiently handles different configurations and the nature of the task, whether it involves forecasting the future input or classifying the input sequence.

RNN’s are known to suffer from issues like vanishing and exploding gradients when trained with long sequences. These issues are mitigated to some extent by RNN variants like Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, which introduce gating mechanisms to better manage information flow.

What are recurrent neural networks?

Recurrent Neural Networks are a class of artificial neural networks designed to recognize patterns in sequences of data, such as text, genomes, handwriting, or the spoken word. They are called “recurrent” because they perform the same task for every element in a sequence, with the output depending on the previous computations. This is different from traditional neural networks, which process inputs independently.

Think of RNNs as a person reading a sentence. The person doesn’t start fresh with each new word – they understand the sentence’s meaning based on the context provided by the words they’ve already read. Similarly, RNNs can use their reasoning about previous events in the sequence to inform later ones. This feature makes them extraordinarily powerful for tasks involving sequential data.

The training process of RNNs employs techniques such as Backpropagation Through Time (BPTT) and Gradient Descent. The computation of error gradients and partial derivatives is fundamental to this learning process.

To illustrate this with a diagram, you would start by drawing a standard feed-forward neural network but then add arrows looping from the output of each neuron back to its input. This represents the recurrent nature of the RNN.