Introduction

Computer vision is a fascinating field that has the potential to solve real-world problems and drive advancements in various industries. It combines cutting-edge technologies like statistical mathematics, computer graphics, and artificial intelligence to process and analyze images and videos. With the ability to recognize patterns, track objects, and process visual data, computer vision has the potential to revolutionize industries like healthcare, transportation, and security, making it a cool and exciting area of technology.

Before you get started innovating the world in your next computer vision project, you might want to consider the ways to optimize your model before you even build it. That’s the heavy lifting commonly done by data preprocessing and dimensionality reduction.

Preprocessing and dimensionality reduction are important steps in computer vision for several reasons:

- Improving data quality: Preprocessing helps to clean and transform the raw data into a more usable format. This can include removing noise and outliers, correcting for inconsistencies, and standardizing the data.

- Increasing efficiency: Preprocessing and dimensionality reduction can help to speed up the training of machine learning algorithms by reducing the number of features in the data. This can also help to reduce the risk of overfitting, which can occur when the algorithm tries to fit too closely to the training data.

- Improving performance: Dimensionality reduction can help to improve the performance of machine learning algorithms by removing redundant features, making the features more distinguishable, and reducing the complexity of the data. This can help to make the algorithms more robust and reliable.

- Reducing computational requirements: Preprocessing and dimensionality reduction can also help to reduce the computational requirements of machine learning algorithms. By reducing the number of features, the algorithms can process the data faster and require less memory.

If you think you may need to preprocess your images and reduce the dimensionality, you can get two birds with one stone with the whitening approaches called PCA-whitening and ZCA-whitening. We’ll take a look at both approaches in a short python example. Note: There is some speculation that PCA/ZCA-Whitening is performed by the retina.

Also Read: Top 20 Machine Learning Algorithms Explained

Data matrix and covariance matrix

There are two concepts which make PCA and ZCA whitening possible: the data matrix and the covariance matrix.

The data matrix (image) is transformed to have a covariance matrix with the identity matrix. This is done by transforming the data matrix using a linear transformation, which involves multiplying the data matrix by the eigenvectors of the covariance matrix. This transformation results in a whitened data matrix with zero mean and a covariance matrix equal to the identity matrix. The whitened data matrix can then be used to train machine learning algorithms, which can improve their performance and reduce the risk of overfitting.

Let’s start with some definitions so we can refer to them as we continue learning about these cool techniques.

Data Matrix:

The data matrix is a matrix that represents the input data. It is typically a n x d matrix, where n is the number of data samples and d is the number of features in each sample. The data matrix is used to calculate the covariance matrix.

Covariance Matrix:

The covariance matrix is a d x d matrix that summarizes the relationships between the features in the data matrix. It represents the covariance between each pair of features and is calculated as the normalized inner product of the data matrix. The covariance matrix is used to perform PCA, which involves finding the principal components of the covariance matrix.

Identity matrix:

The identity matrix is a diagonal matrix where the main diagonal elements are all 1s and all other elements are 0s. It is often used as a scaling factor or as a neutral element in matrix operations. In whitening, it serves as the baseline for transforming the data to have zero mean and unit covariance.

Eigenvectors:

An eigenvector or eigenvector matricesis a vector or (matrix of vectors) that maintains its direction under a linear transformation represented by a matrix and is associated with a scalar multiple known as the eigenvalue. For a given matrix, there may be one or more eigenvectors, each with its corresponding eigenvalue. Eigenvectors are used to find the principal components of a covariance matrix, which can be used to reduce the dimensionality of the data and improve the performance of machine learning algorithms. In PCA, this is provided by the correlation matrices.

PCA-whitening

PCA (Principal Component Analysis) whitening aims to reduce the redundancy in the data by decorrelating the features and rescaling them to have equal variance. The idea behind PCA whitening is to transform the data so that it has zero mean and identity covariance matrix.

The process of PCA whitening can be summarized as follows:

- Center the data: Subtract the mean of the data from each feature to center the data around the origin.

- Compute the covariance matrix: Calculate the covariance matrix of the centered data to get a measure of the relationships between the features.

- Compute the eigenvectors and eigenvalues: Calculate the eigenvectors and eigenvalues of the covariance matrix, which give information about the directions and magnitudes of the principal components of the data.

- De-correlate the data: Project the centered data onto the eigenvectors to obtain the principal components, which are uncorrelated with each other.

- Re-scale the data: Divide the principal components by the square root of the corresponding eigenvalues to re-scale the features to have equal variance.

ZCA-whitening

ZCA (Zero-Phase Component Analysis) whitening is , as you guessed, similar to PCA (Principal Component Analysis) whitening. The main difference between ZCA and PCA whitening is that ZCA whitening preserves the original structure of the data while de-correlating and re-scaling the features.

The process of ZCA whitening can be summarized as follows:

- Center the data: Subtract the mean of the data from each feature to center the data around the origin.

- Compute the covariance matrix: Calculate the covariance matrix of the centered data to get a measure of the relationships between the features.

- Compute the eigenvectors and eigenvalues: Calculate the eigenvectors and eigenvalues of the covariance matrix, which give information about the directions and magnitudes of the principal components of the data.

- De-correlate and re-scale the data: Project the centered data onto the eigenvectors, multiply the result by the square root of the corresponding eigenvalues, and then scale the result by the square root of the eigenvalues again.

- Transform the data: Multiply the de-correlated and re-scaled data by the transpose of the eigenvectors to obtain the ZCA whitened data.

Relation between PCA-whitening and ZCA-whitening

Both ZCA and PCA whitening are commonly used preprocessing steps in computer vision and machine learning for image classification and other tasks. They both aim to remove the correlations between the features in the data and reduce the dimensionality of the data.

ZCA whitening helps to preserve the original structure of the data, while PCA whitening focuses on decorrelating and rescaling the features to make them more distinguishable. Essentially, PCA is a prior step before ZCA with extra transformation matrices. The ZCA method is a contrast to PCA, creating local filters to white a given pixel while maintaining the spatial arrangement and flattening the frequency spectrum of the image.

Which one should I use

As we can see, almost all of the steps for PCA and ZCA whitening are the same except for the last step. In PCA whitening we do a single matrix multiplication and in ZCA whitening we do an extra matrix multiplication with the eigen matrices.

If computational efficiency is a concern and the goal is to reduce the dimensionality of the data, PCA whitening may be the preferred choice. However, if preserving the structure and distribution of the data is important, ZCA whitening may be a better option.

TLDR; Use ZCA over PCA when retaining data structure is more important than reducing dimensionality.

Whitening with Numpy

First, we need some data to work with. Let’s use the Oxford 102 Flower dataset. It contains color photographs of different species of flowers. To make dealing with the data, and as good general practice, we’ll use PyTorch dataloaders. Note: The @ operator in pyton is a matrix multiplication, same as np.dot() in numpy.

You can follow along in the Google Colab notebook here.

from torchvision import datasets

from torch.utils.data import DataLoader

import matplotlib.pyplot as plt

import torch

import torchvision

from torchvision import transforms

reshape = transforms.Compose([transforms.ToTensor(), transforms.Resize((256,256))]) dataset = datasets.Flowers102( root="", download=True, transform=reshape

) dataloader = Dataloader(dataset, batch_size=1, shuffle=True)

To call an image from the dataset, we can simply call…

image, labels = next(iter(dataloader))

If we want to see an image we can…

img = image[0].squeeze()

plt.imshow(img.permute(1,2,0))

plt.show()

Now we can do PCA and ZCA whitening adapted from here.

import numpy as np

from numpy import matlib ## whitening function

#function to do steps 1-4 and then PCA and ZCA whitening

def shared_steps(x, chan=0, PCA=True): #use only first color channel x = np.asarray(x[0,chan]) #center data avg = x.mean(axis=0) x = x - np.matlib.repmat(avg, x.shape[0], 1) #centered-data covariance matrix C = x @ x.conj().T / x.shape[1] #decompose the covariance matrix eigen_matrix, eigen_values, _ = np.linalg.svd(C) #easy to compute rotation matrix (extra step) k=1

xRot = eigen_matrix[:,0:k].T.dot(x) #square root of inverse of eigen matrix em2 = np.diag(1.0 / np.sqrt(eig_values + 1e-5)) #whitening of image if PCA: newim = em2 @ eigen_matrix.conj().T * x else: newim = np.dot(np.dot(eigen_matrix, np.dot(em2, eigen_matrix.conj().T)), x) return newim #call the function to compute the covariance and get our eigens

eigen_matrix, eigen_vectors = shared_steps(image) #finish step 5 for PCA whitening PCA_whitening = PCA_ZCA(image) #finish step 5 for ZCA whitening

ZCA_whitening = PCA_ZCA(image, PCA=False)

Plotting

Putting it all together and plotting our our original images vs. our whitened images …

orig_images = []

pca_images = []

zca_images = [] for i in range(len(dataset)): image, _ = next(iter(dataloader)) orig_images.append(image[0]) ptemp = torch.zeros((3,256,256)) ztemp = torch.zeros((3,256,256)) for chan in range(3): PCA_whitening = PCA_ZCA(image, chan) ptemp[chan,...] = torch.from_numpy(PCA_whitening) ZCA_w;hitening = PCA_ZCA(image, chan, PCA=False) ztemp[chan,...] = torch.from_numpy(ZCA_whitening) pca_images.append(ptemp) zca_images.append(ztemp) #plot the original data

ogrid = torchvision.utils.make_grid(orig_images, nrows=5)

plt.imshow(ogrid.permute(1,2,0))

plt.show() #plot the pca whitened images

pgrid = torchvision.utils.make_grid(pca_images, nrows=5)

plt.imshow(pgrid.permute(1,2,0))

plt.show() #plot the zca whitened images

zgrid = torchvision.utils.make_grid(zca_images, nrows=5)

plt.imshow(zgrid.permute(1,2,0))

plt.show()

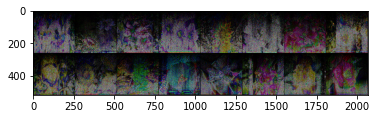

Original data:

PCA-Whitened data:

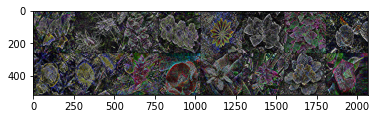

ZCA-Whitened data:

Also Read: What is a Sparse Matrix? How is it Used in Machine Learning?

Conclusion

PCA and ZCA whitening are two powerful techniques for preprocessing and dimensionality reduction in computer vision and other areas of machine learning. These techniques help to remove correlations between features, reduce the dimensionality of the data, and improve the performance of machine learning algorithms by reducing overfitting and making the features more distinguishable as layers of features are built in the neural network. While both PCA and ZCA whitening have their own pros and cons, the choice between them depends largly on the computational constraints and dimensionality of the data. Whether you’re a beginner or an experienced practitioner, understanding the basics of PCA and ZCA optimal whitening is essential for optimizing the performance of your models and advancing your knowledge in computer vision.

For a deeper dive see Stanford’s free resource here.

References

Mocquin, Yoann. “PCA-Whitening vs ZCA-Whitening : A Numpy 2d Visual.” Towards Data Science, 24 Nov. 2022, https://towardsdatascience.com/pca-whitening-vs-zca-whitening-a-numpy-2d-visual-518b32033edf. Accessed 7 Feb. 2023.

Unsupervised Feature Learning and Deep Learning Tutorial. http://ufldl.stanford.edu/tutorial/unsupervised/PCAWhitening/. Accessed 7 Feb. 2023.

“The ‘Independent Components’ of Natural Scenes Are Edge Filters.” Vision Research, vol. 37, no. 23, pp. 3327–38, https://doi.org/10.1016/S0042-6989(97)00121-1. Accessed 13 Feb. 2023.

Unsupervised Feature Learning and Deep Learning Tutorial. http://ufldl.stanford.edu/tutorial/unsupervised/PCAWhitening/. Accessed 13 Feb. 2023.

zellyn. “Deeplearning-Class-2011/Pca_2d.Py at Master · Zellyn/Deeplearning-Class-2011.” GitHub, https://github.com/zellyn/deeplearning-class-2011/blob/master/ufldl/pca_2d/pca_2d.py. Accessed 13 Feb. 2023.