Introduction:

As Artificial Intelligence heralds a new age of technological advancement, an increasing number of people are finding themselves eager to learn about this transformative field.

Whether you’re completely new to AI or simply looking to expand your knowledge, it’s important to familiarize yourself with the key terms associated with it. Here is a list of 50 essential AI terms that everyone should know.

What is AI?

Before we dive in, let’s start with the basics. Artificial Intelligence is the branch of computer science that deals with creating intelligent machines that can work and react like humans. AI is based on the proposition that the process of human thought can be mechanized and programmed into a machine.

Why should I learn artificial intelligence?

Artificial intelligence is already deeply ingrained in our everyday lives, and its importance will only continue to grow. As AI technology becomes more sophisticated, it will touch every aspect of our lives, from the way we work and communicate to the way we shop and even the way we drive.

Learning about AI now will not only give you a leg up in terms of understanding how the world around you works, but it will also prepare you for the many changes to come.

50 AI Terms You Should Know

- Algorithm

- Artificial intelligence

- Autonomous

- Backward chaining

- Bias

- Big data

- Bounding box

- Chatbot

- Cognitive computing

- Computational learning theory

- Corpus

- Data mining

- Data science

- Dataset

- Deep learning

- Entity annotation

- Entity extraction

- Forward chaining

- General AI

- Hyperparameter

- Intent

- Label

- Linguistic annotation

- Machine intelligence

- Machine learning

- Machine translation

- Model

- Neural network

- Natural language generation (NLG)

- Natural language processing (NLP)

- Natural language understanding (NLU)

- Overfitting

- Parameter

- Pattern recognition

- Predictive analytics

- Python

- Reinforcement learning

- Semantic annotation

- Sentiment analysis

- Strong AI

- Supervised learning

- Test data

- Training data

- Transfer learning

- Turing test

- Unsupervised learning

- Validation data

- Variance

- Variation

- Weak AI

Algorithm

First, let’s define the almighty algorithm. An algorithm is a set of rules or instructions followed to solve a problem. In the context of AI, algorithms are used to make decisions or carry out a task. For example, a search engine uses an algorithm to determine which websites to display in response to a user’s query.

Autonomous

When something is autonomous, it can function independently. Self-driving cars are a common example of autonomous technology: they combine sensors, mapping data, and AI to navigate roads. Tesla’s driver-assistance features, for instance, can steer, accelerate, and brake on their own, although they still require an attentive human driver.

Backward chaining

Backward chaining is a type of AI reasoning that starts with the goal and works backward to find the path that will lead to that goal. This contrasts with forward chaining, which begins with data and tries to infer the goal from it.
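As a rough illustration of the idea, here is a minimal sketch of backward chaining in Python. The rule base, the fact names, and the `backward_chain` function are all hypothetical, and the sketch assumes the rules contain no cycles.

```python
# Hypothetical rule base: each goal maps to the premises that prove it.
RULES = {
    "can_fly": ["is_bird", "has_wings"],
    "is_bird": ["has_feathers"],
}
# Hypothetical facts that are known to be true from the start.
FACTS = {"has_feathers", "has_wings"}


def backward_chain(goal, rules, facts):
    """Return True if `goal` is a known fact or can be proven from the rules.

    Assumes the rules are acyclic; a cyclic rule base would recurse forever.
    """
    if goal in facts:
        return True
    premises = rules.get(goal)
    if premises is None:
        return False  # neither a fact nor the conclusion of any rule
    # Work backward: the goal holds only if every premise can be proven.
    return all(backward_chain(p, rules, facts) for p in premises)


print(backward_chain("can_fly", RULES, FACTS))
```

The function starts from the goal (`can_fly`) and only looks at the rules and facts needed to prove it.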

Bias

The scientific method is supposed to be objective, but humans are not. We all have biases that can distort our thinking and lead us to make errors in judgment. These same biases can also find their way into AI systems. For example, if an algorithm is trained on biased data, it will likely produce biased results.

Big data

Big data is a term used to describe extensive data sets that may be too complex for traditional data processing methods. Big data is often characterized by the 3 V’s: volume, velocity, and variety.

For example, a company like Amazon generates huge amounts of data every day from its online sales. It needs AI algorithms that can handle this volume, velocity, and variety to make sense of all the data.

Bounding box

A bounding box is a type of annotation used in image recognition. A rectangle is drawn around an object in an image to identify it. AI algorithms can then use bounding boxes to locate and identify objects in images.
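A bounding box can be represented in code as a label plus the coordinates of two opposite corners. The sketch below is one possible representation, assuming pixel coordinates with the origin at the top-left of the image; the `BoundingBox` class and the example values are made up for illustration.

```python
class BoundingBox:
    """Axis-aligned rectangle drawn around an object in an image."""

    def __init__(self, label, x_min, y_min, x_max, y_max):
        self.label = label
        self.x_min, self.y_min = x_min, y_min
        self.x_max, self.y_max = x_max, y_max

    def area(self):
        """Area of the box in square pixels."""
        return (self.x_max - self.x_min) * (self.y_max - self.y_min)

    def contains(self, x, y):
        """True if the pixel (x, y) lies inside the box or on its border."""
        return self.x_min <= x <= self.x_max and self.y_min <= y <= self.y_max


# Hypothetical annotation: a dog occupying part of an image.
box = BoundingBox("dog", x_min=40, y_min=30, x_max=200, y_max=180)
print(box.label, box.area(), box.contains(100, 100))
```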

Chatbot

From the words “chat” and “robot,” a chatbot is a computer program that can mimic human conversation. Chatbots are often used to provide customer service or information on a website. The AI behind the robot should be able to understand the user’s input and respond accordingly.

Cognitive computing

Cognitive computing is a type of AI that deals with simulating human thought. It is based on the idea that humans use a combination of reasoning, natural language processing, and machine learning to make decisions. By mimicking this process, cognitive computing systems can provide intelligent solutions to problems.

Computational learning theory

Computational learning theory is a branch of AI that deals with the study of algorithms that can learn from data. It is concerned with both the efficiency and accuracy of learning algorithms. For example, a learning algorithm may be efficient but not very accurate, or it may be accurate but not very efficient.

Corpus

A corpus is a dataset used in Natural Language Processing (NLP). It is a collection of text and audio data that is used to train NLP algorithms. For example, Google Translate uses a corpus of billions of words to translate between languages.

Data mining

Data mining is the process of extracting useful information from large data sets. It is often used in marketing to find trends in customer behavior. We can also use data mining to find patterns in data that can be used to make predictions.

Data science

Data science is an interdisciplinary field that deals with extracting knowledge from data. It combines statistics, computer science, and machine learning methods to make sense of data.

Dataset

Similar to a corpus, a dataset is a collection of data used for training a machine learning algorithm. A dataset can be used to train an AI system to perform a task such as image recognition or trend prediction. For example, if you want to train a system to recognize faces, you would need a dataset of images that contain faces. In general, the more varied and representative data you have, the better the system will recognize faces.

Deep learning

Deep learning is a type of machine learning built on artificial neural networks with many layers. These networks are loosely modeled after the brain, and each layer learns to recognize progressively more complex patterns in the data, which reduces the need for hand-crafted rules.

Entity annotation

Entity annotation is a type of tagging used in Natural Language Processing (NLP). It is the process of identifying and labeling entities in text data. For example, in the sentence “John went to the store,” “John” would be labeled as a person entity. Entity annotations help NLP algorithms understand the meaning of the text.

Entity extraction

Entity extraction is the process of identifying and extracting entities from text data. It is similar to entity annotation, but instead of being labeled in place, the entities are pulled out and stored, for example in a database. Entity extraction can be used to create a knowledge base or dataset of information.
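The toy sketch below shows the general shape of entity extraction. It assumes a small hand-made lookup table (`KNOWN_ENTITIES` is hypothetical); real systems typically rely on trained models rather than a fixed list.

```python
import re

# Hypothetical lookup table mapping known names to entity types.
KNOWN_ENTITIES = {"John": "PERSON", "Paris": "LOCATION"}


def extract_entities(text):
    """Return (entity, type) pairs for every known entity found in the text."""
    tokens = re.findall(r"[A-Za-z]+", text)  # split the text into plain words
    return [(t, KNOWN_ENTITIES[t]) for t in tokens if t in KNOWN_ENTITIES]


entities = extract_entities("John went to the store in Paris.")
print(entities)
```

The returned pairs could then be written to a database or knowledge base.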

Forward chaining

When an AI system uses forward chaining, it starts with a set of facts and then tries to derive new facts from them until it reaches a goal. Forward chaining is often used in expert systems, which are computer programs that use a set of rules to solve problems.
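A minimal sketch of forward chaining might look like the following. The rules, facts, and the `forward_chain` function are hypothetical examples, not part of any particular expert system.

```python
def forward_chain(facts, rules):
    """Apply rules repeatedly until no new facts can be derived."""
    derived = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # Fire a rule when all of its premises are already known.
            if conclusion not in derived and set(premises) <= derived:
                derived.add(conclusion)
                changed = True
    return derived


# Hypothetical rules, written as (premises, conclusion) pairs.
RULES = [
    (["has_feathers"], "is_bird"),
    (["is_bird", "has_wings"], "can_fly"),
]
result = forward_chain({"has_feathers", "has_wings"}, RULES)
print("can_fly" in result)
```

Starting from the two known facts, the loop first derives `is_bird` and then uses it to derive `can_fly`.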

General AI

General AI is a type of AI that deals with systems that can perform any task that a human can. That means the system is not limited to a specific task but can learn and perform any given task. This type of AI does not exist yet and is mostly seen in movies and television shows.

Hyperparameter

A hyperparameter is a parameter used to tune a machine learning algorithm. It is a value set before training the algorithm and can be used to improve its performance. For example, the learning rate is a hyperparameter that can control how fast an algorithm learns.
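To see how the learning rate affects training, consider this small sketch of gradient descent on the function f(x) = (x - 3)^2, whose minimum is at x = 3. The function, the starting point, and the `minimize` helper are assumptions chosen only for illustration.

```python
def minimize(learning_rate, steps=50, start=0.0):
    """Run gradient descent on f(x) = (x - 3)**2 and return the final x."""
    x = start
    for _ in range(steps):
        gradient = 2 * (x - 3)         # derivative of (x - 3)**2
        x -= learning_rate * gradient  # step size is set by the hyperparameter
    return x


for rate in (0.01, 0.1, 1.1):
    print(rate, minimize(rate))
```

With a rate of 0.1 the result ends up very close to 3, with 0.01 it is still far from 3 after 50 steps, and with 1.1 each step overshoots and the value moves further away.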

Intent

In the world of machine learning, intent classification is the task of determining the purpose or goal of a text utterance. For example, if someone says, “I want to buy a pizza,” the intent is to purchase something. Intent classification can be used to help chatbots and other NLP applications understand the user’s intention.
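A very simple way to sketch intent classification is keyword matching, as below. The intents and keyword sets are hypothetical, and production chatbots generally use trained classifiers instead of fixed keywords.

```python
import re

# Hypothetical intents, each with a few trigger words.
INTENT_KEYWORDS = {
    "purchase": {"buy", "order", "purchase"},
    "cancel": {"cancel", "refund"},
}


def classify_intent(utterance):
    """Return the first intent whose keywords appear in the utterance."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    for intent, keywords in INTENT_KEYWORDS.items():
        if words & keywords:  # any keyword present?
            return intent
    return "unknown"


print(classify_intent("I want to buy a pizza"))
```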

Label

Labels are part of the foundation of machine learning. A label is a value that is assigned to a data point. When an AI tries to learn from data, it does so by looking at the labels. The label tells the AI what the data point is supposed to represent. In a dataset of images, each image would have a label that tells the AI what is in the image.

Linguistic annotation

Linguistic annotation is the process of adding labels that describe the linguistic features of text or speech so that a machine learning algorithm can learn from them. Typical examples include part-of-speech tags and sentence structure. For example, in the sentence “The dog barked,” “dog” would be annotated as a noun and “barked” as a verb.

Machine intelligence

Machine intelligence is a term that describes a machine that can learn and perform tasks that normally require human intelligence. This includes tasks such as reasoning, natural language processing, and problem-solving.

Machine learning

A term used to describe a type of AI that deals with algorithms that can learn from data without being explicitly programmed. Machine learning algorithms are often used to automatically improve a system’s performance.

Machine translation

A term used to describe a type of AI that deals with translating text from one language to another. Machine translation systems are often used to translate documents or website content automatically.

Model

Models are very important in machine learning. A model is a representation of what a machine learning algorithm has learned from data, and it can be used to make predictions. You can think of a model as a machine learning algorithm that has been trained on data. For example, in a classification task, the model would learn to map input data points to their correct labels.
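As a minimal sketch of what "trained on data" can mean, the example below fits a straight line to a few points using ordinary least squares and then uses it to predict a new value. The data and the `fit_line` and `predict_line` helpers are made up for illustration.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept with ordinary least squares."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / spread
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict_line(model, x):
    """Use the fitted (slope, intercept) pair to predict y for a new x."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data that follows y = 2x + 1 exactly.
model = fit_line([1, 2, 3, 4], [3, 5, 7, 9])
print(model, predict_line(model, 5))
```

Here the "model" is nothing more than the two numbers learned from the data.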

Neural network

If artificial intelligence were compared to a human, the neural network would be the brain. A neural network is a type of machine learning algorithm that is loosely inspired by the way the brain works. Neural networks are often used for tasks such as image recognition and natural language processing.

Natural language generation (NLG)

NLG is a type of AI that deals with generating text from data. NLG systems are often used to generate reports or summaries from data automatically.

Natural language processing (NLP)

NLP is a type of AI that deals with understanding and processing human language. NLP systems are often used for chatbots, sentiment analysis, and text classification tasks. NLG is usually treated as a part of NLP, and large language models such as GPT-3 handle both understanding and generating text in a single system.

Natural language understanding (NLU)

NLU is the part of NLP that deals with understanding the meaning of human language. If processing is compared to hearing, NLU is absorbing the information heard. The two overlap, but each also has distinct goals.

Overfitting

Overfitting happens when a machine learning algorithm learns its training data too closely, including quirks and noise, and then performs poorly on new data. For example, an algorithm might learn to recognize dogs from pictures of dogs that are all standing up. If it were then presented with a picture of a dog lying down, it might not recognize it as a dog.
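This failure, doing well on familiar examples but poorly on new ones, can be shown with a toy comparison. The sketch below contrasts a "model" that simply memorizes its training examples with a simple rule that generalizes; the data, labels, and function names are all hypothetical.

```python
# Hypothetical task: label a number as "small" or "large".
train = [(1, "small"), (2, "small"), (8, "large"), (9, "large")]
test = [(3, "small"), (7, "large")]  # examples not seen during training

memorized = dict(train)


def memorizer(x):
    """Overfit model: only knows the exact examples it was trained on."""
    return memorized.get(x, "unknown")


def threshold_rule(x):
    """Simpler model: a single general rule."""
    return "small" if x < 5 else "large"


def accuracy(model, data):
    """Fraction of examples the model labels correctly."""
    return sum(model(x) == y for x, y in data) / len(data)


for name, model in (("memorizer", memorizer), ("threshold", threshold_rule)):
    print(name, accuracy(model, train), accuracy(model, test))
```

The memorizer is perfect on the training data but fails on every test example, while the threshold rule is correct on both sets.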

Parameter

A parameter is a value inside a model that is learned from the training data, such as the weights and biases in a neural network. Parameters differ from hyperparameters like the learning rate or the number of hidden layers, which are set before training begins.

Pattern recognition

Pattern recognition is the ability to identify patterns in data. It works by looking for similarities in data points. For example, your phone might use pattern recognition to identify unique features in your face so that it can authenticate you and unlock when you look at it.

Predictive analytics

Predictive analytics is a type of AI that deals with making predictions about future events. Predictive systems often forecast things like sales, weather, or stock prices.

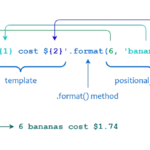

Python

A programming language that is often used for AI and machine learning. Python is a popular choice because it is easy to learn and has many libraries for data science and machine learning.

Reinforcement learning

A type of machine learning that deals with agents that learn by taking actions in an environment to maximize a reward and minimize errors. This type of learning is often used for tasks such as robotics, video games, and self-driving cars.
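One of the simplest reinforcement learning setups is a "multi-armed bandit," where an agent repeatedly picks one of several actions and learns which pays off best. The sketch below uses an epsilon-greedy strategy; the reward probabilities, the `run_bandit` function, and its settings are assumptions for illustration only.

```python
import random


def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent that learns the average reward of each action."""
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)    # how often each action was taken
    values = [0.0] * len(reward_probs)  # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(len(reward_probs))  # explore at random
        else:
            # Exploit the action with the best estimate so far.
            action = max(range(len(values)), key=values.__getitem__)
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Update the running average with the new reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Hypothetical environment: action 1 pays off far more often than action 0.
values, counts = run_bandit([0.2, 0.8])
print(values, counts)
```

Over time the agent's estimates should favor the action with the higher reward probability, so it ends up choosing that action most of the time.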

Semantic annotation

Semantic annotation is the process of adding meaning to data. This can be done manually, or it can be done automatically using AI. Semantic annotation is often used to improve the accuracy of machine learning algorithms.

Sentiment analysis

Sentiment analysis in artificial intelligence is the process of understanding the attitude of a speaker, writer, or another source from their text. This can be done by looking at the words used, the tone of the writing, or the text’s overall meaning.
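The "looking at the words used" approach can be sketched with a tiny word list, as below. The word lists and the `sentiment` function are hypothetical, and this kind of lexicon lookup ignores tone, negation, and context.

```python
import re

# Hypothetical sentiment word lists.
POSITIVE = {"good", "great", "love", "excellent"}
NEGATIVE = {"bad", "terrible", "hate", "poor"}


def sentiment(text):
    """Classify text by counting positive and negative words."""
    words = re.findall(r"[a-z]+", text.lower())
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(sentiment("I love this phone, the camera is great"))
```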

Strong AI

Like General AI, Strong AI is an AI system with human-like intelligence. It can understand or learn any intellectual task that a human being can. For example, a strong AI should technically be able to plan for the future, understand natural language, and so on.

Supervised learning

A type of machine learning where the data is labeled and the algorithm learns from this data. Instead of trying to find patterns itself, the algorithm is given labels that tell it what the pattern looks like. This type of learning is often used for tasks such as image classification and text recognition.
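As a small sketch of learning from labeled data, the example below classifies a new point by copying the label of its nearest labeled neighbor. The data points, labels, and the `predict_label` function are invented for illustration.

```python
def predict_label(training_data, point):
    """Return the label of the training example closest to `point`."""

    def squared_distance(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    _, nearest_label = min(
        training_data,
        key=lambda example: squared_distance(example[0], point),
    )
    return nearest_label


# Hypothetical labeled examples: (features, label).
training_data = [
    ((1.0, 1.0), "cat"),
    ((1.2, 0.8), "cat"),
    ((5.0, 5.0), "dog"),
    ((5.5, 4.5), "dog"),
]
print(predict_label(training_data, (4.8, 5.1)))
```

The labels in `training_data` are what make this supervised: the algorithm never has to discover the "cat" and "dog" groups by itself.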

Test data

Test data is data that is used to evaluate the performance of a machine learning algorithm after training. It is kept separate from the training data so that the evaluation reflects how the model handles examples it has not seen, and it is typically much smaller than the training set.

Training data

Training data is data that is used to train a machine-learning algorithm. This is the data that the algorithm uses to find patterns and learn how to complete the task. The training data is typically a large dataset that includes many different examples of what you are trying to learn.

Transfer learning

Transfer learning is a technique in which a model that has been trained on one task is reused or fine-tuned for another task. It is often done with pre-trained models, which someone else has trained and made available to the public. Transfer learning can be used to speed up the training process or improve a model’s performance.

Turing test

The Turing test is a test for determining whether or not a machine is intelligent. The test is named after Alan Turing, who proposed it in 1950. The basic idea is that if a human cannot tell the difference between a machine and another human, then the machine is said to be intelligent.

The test consists of a human judge engaging in a natural language conversation with two other participants, one of which is a machine. If the judge cannot tell which one is the machine, then the machine is said to have passed the test.

Unsupervised learning

A type of machine learning where the data is not labeled, and the algorithm has to find patterns on its own. This contrasts with supervised learning, where the information is marked, and the algorithm knows what it is looking for. Unsupervised learning is often used for tasks such as clustering and dimensionality reduction.
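Clustering can be sketched with a bare-bones version of the k-means algorithm on one-dimensional data. The values, the starting centers, and the `kmeans_1d` function are assumptions chosen to keep the example short.

```python
def kmeans_1d(values, centers, iterations=10):
    """Group numbers around the nearest center, then move each center
    to the mean of its group, and repeat."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(v - centers[i]))
            clusters[nearest].append(v)
        # Keep the old center if a cluster ended up empty.
        centers = [
            sum(c) / len(c) if c else centers[i]
            for i, c in enumerate(clusters)
        ]
    return centers, clusters


# Hypothetical unlabeled data with two obvious groups.
centers, clusters = kmeans_1d([1.0, 1.5, 2.0, 9.0, 10.0, 11.0], centers=[0.0, 5.0])
print(centers, clusters)
```

No labels are supplied; the two groups emerge from the distances between the values.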

Validation data

Validation data is a set of data held out from the training data and used to check a machine learning algorithm while it is being developed, for example to tune hyperparameters or to detect overfitting. It is typically much smaller than the training set and is kept separate from the test data, which is reserved for the final evaluation.
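A dataset is commonly divided into training, validation, and test portions before any learning happens. The sketch below shows one way to do this; the 70/15/15 split, the fixed seed, and the `split_dataset` function are assumptions rather than fixed rules.

```python
import random


def split_dataset(examples, train_pct=70, val_pct=15, seed=0):
    """Shuffle the examples and split them into three non-overlapping parts."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed for repeatability
    n_train = len(shuffled) * train_pct // 100
    n_val = len(shuffled) * val_pct // 100
    train = shuffled[:n_train]
    validation = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains
    return train, validation, test


# Hypothetical dataset of 100 numbered examples.
train, validation, test = split_dataset(range(100))
print(len(train), len(validation), len(test))
```

Shuffling before splitting helps each portion reflect the dataset as a whole.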

Variance

In statistics, variance measures how far a set of numbers is spread out from its mean. In machine learning, variance measures how much a model would change if it were trained on different data. A model with high variance tends to overfit, meaning it has fit the training data too closely and does not generalize well to new data.
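The statistical definition can be checked with a short calculation. The sketch below computes the population variance by hand and compares it with Python's built-in `statistics.pvariance`; the list of numbers is an arbitrary example.

```python
import statistics

# Arbitrary example data with a mean of 5.
numbers = [2, 4, 4, 4, 5, 5, 7, 9]

mean = sum(numbers) / len(numbers)
# Population variance: the average squared distance from the mean.
manual_variance = sum((n - mean) ** 2 for n in numbers) / len(numbers)

print(manual_variance, statistics.pvariance(numbers))  # both equal 4
```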

Weak AI

Weak AI, also known as narrow AI, is an AI system that has been designed or trained to do one specific task. For example, a weak AI might beat a human at chess or Go, but it would not be able to understand natural language or walk on two legs.
